My boss recently asked me how I'd build out SimCity's online systems today if I knew everything three years ago that I know now. I didn't hesitate. "I'd take a good, long look at Scala and, by extension, Lift."

Scala has a lot that appeals to the me of today. I like its hand-in-hand support for functional programming and emphasis on immutable objects. I've gotten used to both concepts with Erlang, and I've come to appreciate that programming paradigm for building robust, scalable systems. But Scala also offers imperative syntax and mutable objects if you need them.

Scala has native support for the actor model abstraction of concurrency, which I first encountered with Erlang (Scala's actor syntax is openly lifted from that language's). The actor model makes it much easier to manage and reason about concurrent code, and Scala supports two major implementations: actors tied to particular threads, and event-based actors that run on whatever thread is available, maximizing resource utilization.

And, unlike something like node.js or even Erlang, Scala has a huge universe of libraries at its disposal thanks to its bytecode compatibility with Java.

All good stuff. I thought it was time to do something real with it.

Before I dove into a large system, I thought I'd write a simple application. We have a group of binary files on SimCity that are very important to the game but, being binary, aren't easy to debug when something goes wrong. So I thought I'd do a quick project on my own to write a Java-based parser for the files and compare it to a Scala-based version. Little admin tools like this or other low-risk sections of the code base are often good ways to try out new tech and see if it will fit into the larger project. This code didn't leverage any of the concurrency systems in Scala; I just wanted a simple program.

One reason people like Scala — and, indeed, the many other JVM-compatible languages — is conciseness. Spend any time at all with Scala, Ruby, or — it sometimes seems — any other language, and Java's verboseness begins to feel like cement around your hands, sucking time and productivity away from your programming. Plus, more code, even Java's boilerplate, means more potential bugs.

As a simple measure, I compared the non-whitespace characters in my two versions. I structured the programs the same way, mirroring class structures and refactored methods. But I used the support that each language gave me for keeping things concise.

The Java version was 5258 characters. The Scala version? 3099. The Java version was almost 70 percent larger.

| Java code (non-whitespace characters) | Scala code (non-whitespace characters) |
|---|---|
| 5258 | 3099 |
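The measurement itself is easy to reproduce. Here is a minimal sketch of how such a count and the percentage could be computed; the object and method names are made up for illustration, and the post doesn't say which tool was actually used.

```scala
object SizeCompare {
  // Count every character that is not whitespace (spaces, tabs, newlines).
  def nonWhitespaceCount(source: String): Int =
    source.count(c => !c.isWhitespace)

  // How much larger `bigger` is than `smaller`, as a percentage of `smaller`.
  def percentLarger(bigger: Int, smaller: Int): Double =
    (bigger - smaller) * 100.0 / smaller
}
```

Calling `SizeCompare.percentLarger(5258, 3099)` gives roughly 69.7, which is where "almost 70 percent" comes from.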

Scala's biggest single win was with a file full of small classes that defined types of data within the file I was parsing. The Java version was 160 percent bigger than the Scala one.

This makes sense. Let's say you wanted an immutable class in Java to represent a point in 3D space. This is about as concise as you can get it.

    public class Point {
        public final float x, y, z;
        public Point(float _x, float _y, float _z) {
            x = _x;
            y = _y;
            z = _z;
        }
    }

Here's the equivalent Scala code.

    class Point(val x: Float, val y: Float, val z: Float)
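To make the equivalence concrete, here is a small sketch of that class in use. The `CasePoint` variant and the `PointDemo` object are additions for illustration and don't come from the original parser; a case class is one further option Scala gives you, generating `equals`, `hashCode`, `toString`, and `copy` on top of the immutable fields.

```scala
// The one-line class from above: each `val` is a public, read-only field.
class Point(val x: Float, val y: Float, val z: Float)

// Hypothetical case class variant (not part of the original parser).
case class CasePoint(x: Float, y: Float, z: Float)

object PointDemo {
  def main(args: Array[String]): Unit = {
    val p = new Point(1f, 2f, 3f)
    // p.x = 5f would not compile, because x is a val.
    println(p.x + p.y + p.z)

    val a = CasePoint(1f, 2f, 3f)
    val b = a.copy(z = 9f) // builds a new object; `a` is unchanged
    println(a == CasePoint(1f, 2f, 3f)) // structural equality
    println(b.z)
  }
}
```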

But Scala offers lots of little aids as well. You rarely need Java's omnipresent semicolons; you don't need to declare types as often, since Scala can usually infer them; you don't need to explicitly type "return" at the end of a function, because the last result in the function is the return value; you don't have to declare that you throw exceptions. The list of little things goes on and obviously adds up.
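A few of those aids side by side, as a rough sketch. The method names here are invented for the example and aren't taken from the parser.

```scala
object LittleAids {
  // No semicolons, and the result type (Int) is inferred.
  def square(n: Int) = n * n

  // The last expression is the return value; no `return` keyword needed.
  def describe(n: Int): String =
    if (n % 2 == 0) "even" else "odd"

  // IOException is a checked exception in Java, but Scala requires
  // no `throws` clause on the method that throws it.
  def requireNonEmpty(header: Array[Byte]): Unit =
    if (header.isEmpty) throw new java.io.IOException("empty header")
}
```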

Functional programming, too, offers some conciseness. I needed a routine to read an unsigned int out of a variable-length byte array. In the Java version, I wrote this:

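    // Folds big-endian bytes into a long, then narrows the result to an int.
    private static int byteArrayToInt(byte[] bytes) throws IOException {
        long retVal = 0;
        for (int i = 0; i < bytes.length; i++) {
            // Mask with 0xff so a negative byte is treated as 0..255.
            retVal = (retVal << 8) | ((long)bytes[i] & 0xff);
        }
        return (int)retVal;
    }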

In the Scala version, I wrote this:

    private def byteArrayToInt(bytes: Array[Byte]) = {
      ((0L /: bytes) {(current,newByte) => (current << 8).toLong | (newByte & 0xff).toLong}).toInt
    }

(The longs in this int-parsing code are there because I needed to read very large unsigned ints from the files, and Java interprets its 32-bit ints as signed. The way to get around that is to accumulate into a wider type, namely a long.)

You could argue that the Scala version is concise to the point of obscurity, even if you're familiar with the functional-programming mainstay foldLeft operation it represents. I agree that there's a balance to be struck. In particular, I'm not sold on the /: operator for foldLeft; I might opt for spelling it out to be clearer.
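For comparison, here is roughly what the spelled-out version looks like. The first method is the same fold with `foldLeft` written by name. The second, `byteArrayToUnsigned`, is a hypothetical variant that is not in the original parser; it skips the final narrowing to `Int` so a large unsigned value stays positive.

```scala
object ByteFold {
  // Same fold as the /: version, with foldLeft written out.
  // The accumulator starts at 0L; each byte is masked to 0..255
  // and OR-ed into the low eight bits after shifting left.
  def byteArrayToInt(bytes: Array[Byte]): Int =
    bytes.foldLeft(0L) { (acc, b) => (acc << 8) | (b & 0xff) }.toInt

  // Hypothetical variant: return the Long directly, without narrowing.
  def byteArrayToUnsigned(bytes: Array[Byte]): Long =
    bytes.foldLeft(0L) { (acc, b) => (acc << 8) | (b & 0xff) }
}
```

With four `0xff` bytes as input, the `Int` version wraps around to -1 while the `Long` version yields 4294967295.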

For functional programming geeks, note that, to the extent it can, Scala offers tail-call optimization on recursive calls.
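As an illustration of what "to the extent it can" means: the compiler rewrites a method that calls itself in tail position into a loop, and the `@tailrec` annotation turns a missed optimization into a compile error. The sketch below reuses the byte-folding problem; the names are invented for the example.

```scala
import scala.annotation.tailrec

object TailRecDemo {
  def byteArrayToLong(bytes: Array[Byte]): Long = {
    // @tailrec makes compilation fail if the recursive call
    // is not in tail position, so the loop rewrite is checked.
    @tailrec
    def loop(index: Int, acc: Long): Long =
      if (index == bytes.length) acc
      else loop(index + 1, (acc << 8) | (bytes(index) & 0xff))

    loop(0, 0L)
  }
}
```

Because the recursion compiles to a loop, even a very long array doesn't grow the call stack. Calls between two different methods don't get this treatment.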

But things weren't all sunshine and roses on the Scala side. Here is the average time to run my program for each version, timed over 1000 iterations.

| Java time (average per run) | Scala time (average per run) |
|---|---|
| 88 ms | 182 ms |
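A timing loop of this kind could be sketched as follows. This is an assumption about the general shape of the measurement, not the harness actually used for the numbers above, and `averageMillis` is a made-up name.

```scala
object Timing {
  // Run `body` `iterations` times and return the mean wall-clock
  // time per run in milliseconds.
  def averageMillis(iterations: Int)(body: => Unit): Double = {
    val start = System.nanoTime()
    var i = 0
    while (i < iterations) {
      body
      i += 1
    }
    (System.nanoTime() - start) / 1e6 / iterations
  }
}
```

It would be called as `Timing.averageMillis(1000) { parseFile() }`, where `parseFile` stands in for the program under test. A simple average like this includes JVM warm-up in the early iterations, so it is only a coarse comparison.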

To some extent, I expected this. Scala has to compile down to Java bytecode, which means that all that syntactic sugar and functional programming and closure support must turn into Java concepts somewhere. Even my little program generates a slew of extra classes and, presumably, lots of extra code that has to be navigated. Also, I think it's reasonable to imagine that immutable objects necessarily mean that new objects have to be created more often than they would in mutable space, where you can change an object directly. Finally, I've been working with Java in one form or another for 16 years or so; I've been working in Scala for about three days. So I'm likely missing out on performance tips.

I admit, though, that this seems like a huge difference for some extra classes and objects and a missed optimization step or two. Even if it's correct, I'm still of the mindset that greater productivity and easier, safer concurrency are big wins. (Note that you could always switch to imperative mode in key sections if performance demanded it, in much the same way that some sites offload work to C programs.)
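Switching to imperative mode in Scala looks a lot like the Java version. Here is a sketch of the byte-folding routine written with a mutable accumulator and a `while` loop; whether it is actually faster than the fold would need to be measured, and the object name is invented for the example.

```scala
object ImperativeFold {
  // Same result as the fold, written with vars and a while loop.
  def byteArrayToInt(bytes: Array[Byte]): Int = {
    var result = 0L
    var i = 0
    while (i < bytes.length) {
      // Shift the accumulator up one byte and add the next byte as 0..255.
      result = (result << 8) | (bytes(i) & 0xff)
      i += 1
    }
    result.toInt
  }
}
```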

If I were really honest about how I'd rebuild SimCity, I'd probably use Erlang, where you have to do things functionally, have a virtual machine that supports what you're doing, and have native systems for handling failures with aplomb. But Scala at least offers the potential of hiring from the pool of Java programmers, whereas Erlang really doesn't. (On the other hand, the vast majority of Java programmers I've seen seem to be couched safely and comfortably in Java, so wouldn't necessarily adapt. But Erlang would be a way bigger change, I think.)

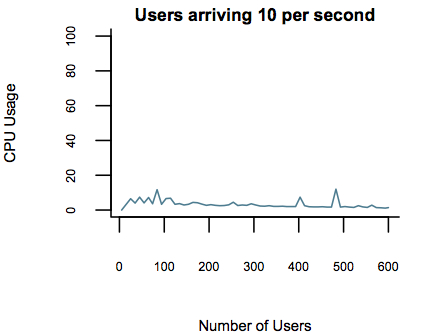

I'm going to keep plunking away at Scala and try to build something a bit more real with it. Event-based actors might be a bit slower, but if they can scale vastly better, that may matter more to a site.